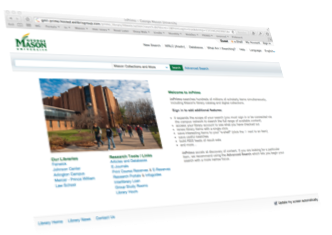

In preparation for tomorrow’s launch of inPrimo, we just added an important new service to the system: the ability to discover from within inPrimo whether any library in the WRLC holds a particular journal in their collection(s).

A few screen grabs will show how the service works:

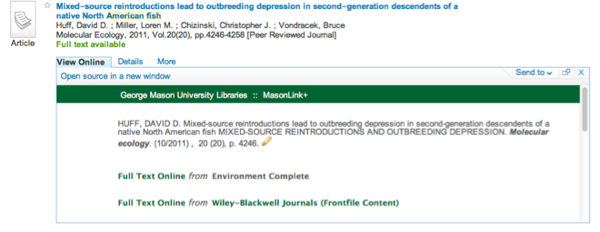

Let’s say an inPrimo search brings up this citation of interest. At this point, our user has clicked the “View Online” link and now sees an in-place-reveal window (is there a better term for this?). It is filled with information we provide based on a response from our OpenURL link resolver (360Link).

Here comes the first of several name checks in this post: we rely heavily on the work of Matthew Reidsma (Grand Valley State University) and 22K of local JavaScript to restyle and manipulate the output delivered by the 360Link resolver.

It’s easy to reflect Mason’s holdings in this environment: the “Full Text Online” links show where Mason affiliates can access content published in Molecular Ecology. Of course, there are times when Mason does not have licensed access to content, which tends to complicate things.

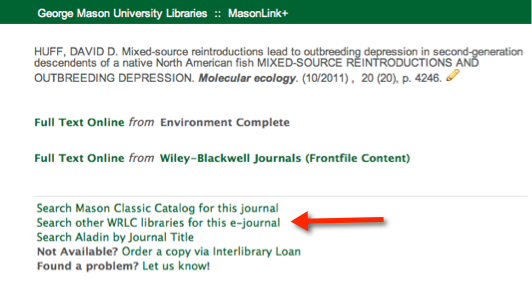

Until today, the “next best” option was opening a new browser window and manually running a search on the WRLC union catalog to see if other libraries in our consortium might hold the journal issue of interest. Or you could click the “Search Aladin by Journal Title” link and wade through the results, trying to make sense of the Voyager display of individual library holdings.

As of today, we can answer that question with a single click–all without leaving inPrimo and without disturbing the results set of the search just run.

How? Here’s the more complete version of the MasonLink+ window we saw in the first image. In this view, you can see all the linking options we offer for this particular title. And yes, I did just add the large red arrow for purposes of this post…

Clicking the “Search other WRLC libraries…” link launches a Django application developed by Daniel Chudnov, Joshua Gomez, Laura Wrubel and others over at George Washington University, work done to support their any-day-now implementation of Summon. The GW team is reliably forward-thinking: their software offers a really simple API, and that open design is the key for our purposes. While we don’t need anything extra to identify Mason’s holdings, without the service they’ve developed we’d have no method for seamless discovery of holdings locked inside the WRLC’s Voyager system.

On our end, a local PHP application (developed by Khatwanga Siva Kiran Seesala with a mentoring assist from our resident PHP expert Andrew Stevens) queries their “findit” service with an ISSN and then reformats the JSON output for “pretty print” in the browser.
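For readers who want a feel for that query-then-reformat flow, here is a minimal sketch in Python (not the PHP we actually run). The endpoint URL, the `issn` parameter name on the GW side, and the JSON field names (`title`, `holdings`, `library`, `coverage`) are all assumptions made up for illustration; the real findit API and its response shape may differ.

```python
import json
from urllib.parse import urlencode

# Hypothetical endpoint: a placeholder, not the real GW findit address.
FINDIT_API = "http://findit.example.edu/api"


def build_query(issn: str) -> str:
    """Build the lookup URL for one ISSN (parameter name is an assumption)."""
    return f"{FINDIT_API}?{urlencode({'issn': issn})}"


def format_holdings(payload: str) -> str:
    """Turn a JSON response into a plain-text summary, one library per line.

    The field names used here are hypothetical stand-ins for whatever
    the service really returns.
    """
    data = json.loads(payload)
    lines = [data.get("title", "Unknown title")]
    for holding in data.get("holdings", []):
        library = holding.get("library", "Unknown library")
        coverage = holding.get("coverage", "coverage not listed")
        lines.append(f"  {library}: {coverage}")
    return "\n".join(lines)


# A made-up response, only to show the shape of the transformation.
sample = json.dumps({
    "title": "Example Journal",
    "holdings": [
        {"library": "Library A", "coverage": "v.1 (1990)-"},
        {"library": "Library B"},
    ],
})

if __name__ == "__main__":
    print(build_query("0962-1083"))
    print(format_holdings(sample))
```

In a real deployment the payload would come from an HTTP request to the service rather than a canned string, and the formatter would emit HTML instead of plain text.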

So, for Molecular Ecology (0962-1083), the end result looks a bit like the image below. The output is a long scrollable display, but I’ve shortened it here to show just a couple of the libraries. Notice that for print runs we offer an SMS message link (to send yourself the metadata you need if traveling to another library to retrieve the journal). We also offer a “Request” link to use the Consortium Loan Service (for delivery of hard copy).

We’ve styled the service to run within the inPrimo environment, but supply an ISSN and it works fine by itself. Remember Dr. Dobb’s Journal (ISSN 1044-789X)?

Wonder if any library in the Washington Research Library Consortium has a copy?

http://infowiz.gmu.edu/findit/?issn=1044-789X
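If you want to build links like that one from your own pages, a small helper along these lines might be useful. This is a sketch in Python, not part of our service: the base URL and the `issn` parameter come from the link above, while the function names and the decision to check the ISSN before building the link are my own assumptions. The check itself is the standard ISSN check digit (weights 8 down to 2 on the first seven digits, modulo 11, with `X` standing for 10).

```python
import re
from urllib.parse import urlencode

# Base URL and parameter name are taken from the link in this post.
BASE_URL = "http://infowiz.gmu.edu/findit/"


def is_valid_issn(issn: str) -> bool:
    """Check the NNNN-NNNC form and verify the ISSN check digit."""
    match = re.fullmatch(r"([0-9]{4})-([0-9]{3})([0-9Xx])", issn)
    if not match:
        return False
    digits = match.group(1) + match.group(2)
    # Weights run 8, 7, ..., 2 across the first seven digits.
    total = sum(int(d) * w for d, w in zip(digits, range(8, 1, -1)))
    check = (11 - total % 11) % 11
    expected = "X" if check == 10 else str(check)
    return match.group(3).upper() == expected


def findit_url(issn: str) -> str:
    """Build a standalone lookup link, rejecting malformed ISSNs early."""
    if not is_valid_issn(issn):
        raise ValueError(f"not a valid ISSN: {issn!r}")
    return f"{BASE_URL}?{urlencode({'issn': issn.upper()})}"


if __name__ == "__main__":
    print(findit_url("1044-789X"))  # Dr. Dobb's Journal
    print(findit_url("0962-1083"))  # Molecular Ecology
```

Validating up front simply avoids sending an obviously mistyped number; whether the service itself rejects bad ISSNs is not something this sketch assumes.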